Reviewing explainable artificial intelligence (XAI) and its importance in tax administration

Introduction

Two years ago, we published a post on this CIAT blog highlighting the growing importance of XAI for the development of Artificial Intelligence (AI) applications in several areas of society, especially in tax administrations [Seco, 2021]. This post gives a general update on developments in some XAI-related issues since then and provides references for readers interested in more in-depth study.

Explainable AI or XAI deals with the development of techniques/models that make the operation of an AI system understandable to a certain audience.

“Black box” models, such as neural networks, fuzzy modeling (fuzzy logic) and gradient boosting, usually provide flexibility and greater predictive accuracy at the expense of interpretability. “White box” models rely on simpler methods, such as linear regression and decision trees, but tend to have weaker predictive power [Loyola-González, 2019]. Additionally, Myrianthous (2023) points out that more flexible models do not guarantee better results because of the phenomenon called overfitting: by following the noise and errors in the training data too closely, they no longer generalize properly to new, unseen data points.
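As a rough illustration of overfitting, the sketch below compares a one-rule "white box" classifier with a very flexible model that simply memorizes the training set. The data are synthetic and every name and parameter (the 0.5 cut-off, the 20% label noise, the sample sizes) is an assumption made for this example, not something taken from the cited works.

```python
import bisect
import random

def make_data(n, noise, rng):
    """x is uniform in [0, 1]; the true label is x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = x > 0.5
        if rng.random() < noise:
            label = not label
        data.append((x, label))
    return data

def fit_threshold(train):
    """Simple model: one rule 'x > t', with t chosen to maximise training accuracy."""
    candidates = [0.0] + [x for x, _ in train]
    return max(candidates, key=lambda t: sum((x > t) == y for x, y in train))

def fit_nearest_neighbour(train):
    """Very flexible model: memorise every training point and copy the closest label."""
    ordered = sorted(train)
    xs = [x for x, _ in ordered]

    def predict(x):
        i = bisect.bisect_left(xs, x)
        neighbours = [j for j in (i - 1, i) if 0 <= j < len(xs)]
        best = min(neighbours, key=lambda j: abs(xs[j] - x))
        return ordered[best][1]

    return predict

def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)

def run_experiment(seed=0):
    rng = random.Random(seed)
    train = make_data(500, 0.2, rng)
    test = make_data(5000, 0.2, rng)
    t = fit_threshold(train)
    rule = lambda x: x > t
    memoriser = fit_nearest_neighbour(train)
    return {
        "rule_train": accuracy(rule, train),
        "rule_test": accuracy(rule, test),
        "memoriser_train": accuracy(memoriser, train),
        "memoriser_test": accuracy(memoriser, test),
    }

results = run_experiment()
for name, value in results.items():
    print(f"{name}: {value:.3f}")
```

The memorizing model reproduces every noisy training label, so its training accuracy is perfect, yet it copies that noise onto new points. The single rule cannot fit the noise and should therefore hold up better on the unseen sample.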

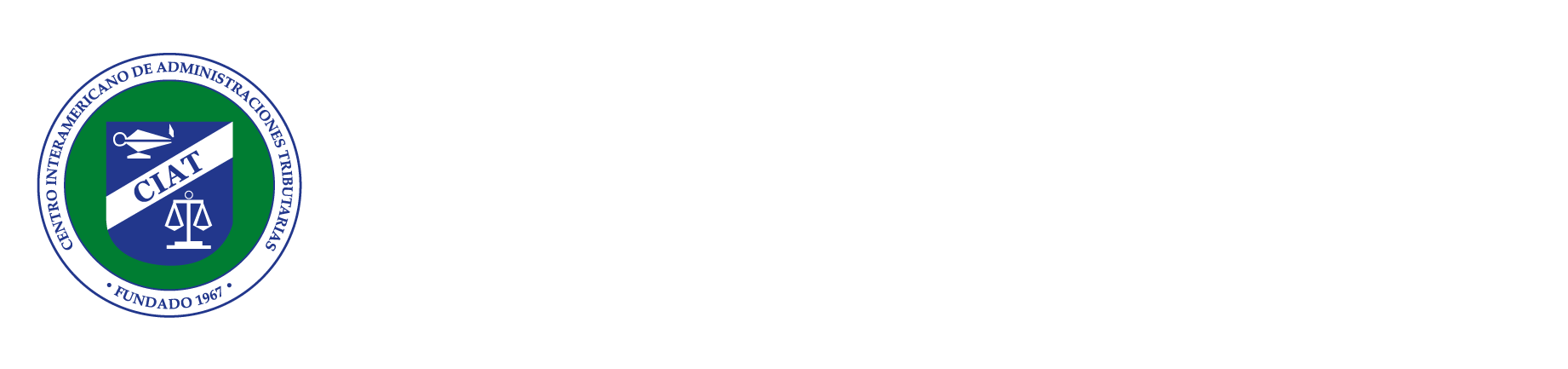

Figure 1 shows the ”explanation” located in the implementation flow of AI solutions:

Figure 1: Source – [Fettke, 2023]

So far, the terms interpretability and explainability have usually been treated as synonyms in the field of AI. Recent works propose different meanings: interpretability would apply to AI models that are intelligible by their nature, such as decision trees (by following paths in the tree, the user can follow the decision logic as easily as a mathematical formula); explainability would apply to black box AI models that are made understandable through external resources, such as visualizations [Kuzniacki, 2021].
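To make the idea of "following paths in the tree" concrete, here is a minimal sketch of a hand-written decision tree that returns both its prediction and the conditions it tested along the way. The tree, the feature names (`declared_income_gap`, `late_filings`) and the thresholds are hypothetical and chosen only for illustration.

```python
# Hypothetical, hand-written tree; feature names and thresholds are invented for illustration.
TREE = {
    "feature": "declared_income_gap",
    "threshold": 0.30,
    "left": {"label": "low risk"},
    "right": {
        "feature": "late_filings",
        "threshold": 2,
        "left": {"label": "medium risk"},
        "right": {"label": "high risk"},
    },
}

def predict_with_path(node, record):
    """Return the leaf label together with the list of conditions tested on the way down."""
    path = []
    while "label" not in node:
        value = record[node["feature"]]
        if value <= node["threshold"]:
            path.append(f'{node["feature"]} = {value} <= {node["threshold"]}')
            node = node["left"]
        else:
            path.append(f'{node["feature"]} = {value} > {node["threshold"]}')
            node = node["right"]
    return node["label"], path

label, path = predict_with_path(TREE, {"declared_income_gap": 0.45, "late_filings": 3})
print(label)
for step in path:
    print(" -", step)
```

The path is the explanation: no external tool is needed, because the model's structure already states why the record ended up in a given leaf.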

Relevance

Kuzniacki and Tylinski (2020) show that the biggest sources of risk for integrating AI into the legal process in Europe are the General Data Protection Regulation (GDPR)[1] and the European Convention on Human Rights (ECHR)[2]. The legal provisions mentioned by the authors are as follows:

The right to an explanation (Articles 12, 14 and 15 of the GDPR): This right deals with the transparency of an AI model. If the tax authorities make a decision based on an AI model, they should be able to explain to taxpayers how this decision was made, providing sufficiently complete information so that they can take action to challenge the decision, correct inaccuracies or request its cancellation.

The right to human intervention (Article 22 of the GDPR): The right to human intervention is a legal tool to challenge decisions that are based on data processed through automatic means, such as AI models. The idea is that taxpayers should have the right to challenge an automated decision of the AI model and have it reviewed by a person (usually a tax auditor). For this to be effective, the taxpayer must first know how the model reached a conclusion (returning then to the right to an explanation).

The right to a fair trial (Article 6 of the ECHR): This right covers the minimum guarantees of equality and the right to a defence. It means that taxpayers should be allowed to effectively review the information on which the tax authorities base their decisions. They should have the means to comprehensively understand the relevant legal factors used to decide on a tax law application and the logic behind the AI model that prompted the authorities to make a certain decision.

The prohibition of discrimination and protection of property (ECHR): The prohibition of discrimination, together with the protection of property, requires the tax authorities to issue decisions in a non-discriminatory manner, i.e., not to treat taxpayers differently without objective and reasonable justification. This requirement seeks to address the tendency of certain AI algorithms to exhibit biases as a result of imbalances in the data used to train them.

Considering that European regulations serve as the basis for many countries to develop their own legislation, these observations are important when discussing the topic.

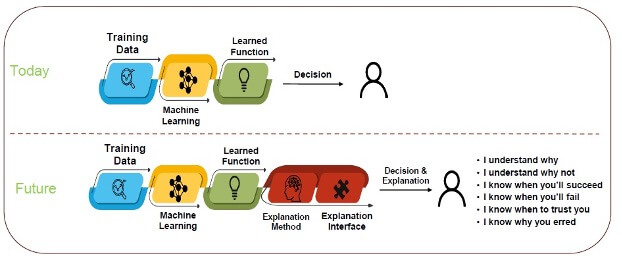

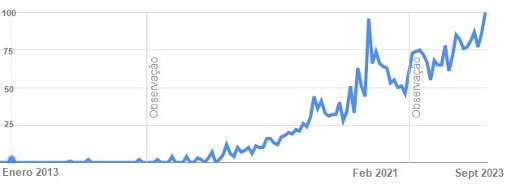

At the global level, the growing relevance of the XAI can be observed by (a) the evolution of the Popularity Index of Google Trends[3] for the term “Explainable AI” in the last 10 years (Figure 2) and (b) the evolution of the number of articles on AI interpretability and explainability submitted to the conceptualized NEURIPS Conference between 2015 and 2021 (Figure 3):

Figure 2: Evolution of the search for “Explainable AI” on Google (source: Google Trends)

Figure 3: Evolution of the number of accepted papers on interpretability and explainability at the NEURIPS Conference 2015-2021 – Source: [Ngo and Sakhaee, 2022]

Methods of explainability

In parallel with AI, methods that explain and interpret machine learning models are also thriving.

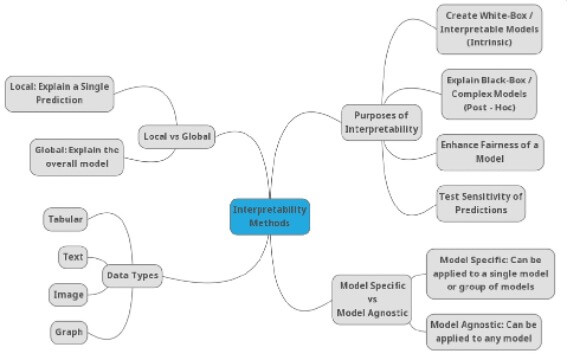

In Linardatos et al. (2021) a mind map for the taxonomy of machine learning interpretability techniques is proposed, based on the objectives for which these methods were created and the ways through which they achieve this purpose (Figure 4).

Figure 4: Mind map of the taxonomy of interpretability techniques for machine learning (Source: Linardatos et al., 2021)

In this taxonomy, interpretability methods can be divided according to the algorithms they apply to: methods restricted to a specific family of algorithms are called model-specific; conversely, methods that can be applied to any algorithm are called model-agnostic (model-independent).
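One way to picture a model-agnostic method is a function that only ever calls the model and never inspects its internals. The sketch below implements a simple form of permutation importance under that constraint; the `black_box` model and the synthetic data are hypothetical stand-ins, and real libraries offer more careful versions (for example with repeated shuffles).

```python
import random

def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Drop in accuracy when one feature column is shuffled, for any callable `predict`."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances

# Hypothetical black box: the method only calls it and never looks inside.
def black_box(row):
    return row[0] > 0.5  # secretly uses feature 0 only

rng = random.Random(1)
rows = [(rng.random(), rng.random()) for _ in range(400)]
labels = [black_box(r) for r in rows]
importances = permutation_importance(black_box, rows, labels, n_features=2)
print(importances)
```

Because the function needs nothing but `predict`, the same code would work for a neural network, a gradient boosting model or a rule set. A model-specific method, by contrast, would rely on internals such as tree splits or network gradients.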

In addition, four categories of interpretability methods are introduced, based on the purpose for which they were created and the ways in which they achieve it: methods to explain complex black box models; methods to create white box models; methods that promote fairness and restrict the existence of discrimination; and methods to analyze the sensitivity of the models’ predictions.
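As a small example of what a fairness-oriented check might look like, the sketch below compares the share of positive decisions (say, "selected for audit") across groups. The decisions, the group labels and the choice of this particular metric (often called the demographic parity gap) are assumptions for illustration; the taxonomy above covers a much wider family of methods.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(decision)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Difference between the highest and the lowest selection rate (0 means equal rates)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs for eight taxpayers in two groups.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))
print(demographic_parity_gap(decisions, groups))
```

A large gap does not prove discrimination by itself, but it flags a difference in treatment that would need an objective and reasonable justification.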

XAI and tax audits

XAI is not a monolithic concept, and adopting a single model for all of public administration would be unreasonable.

According to Mehdiyev et al. (2021), the sufficiency and relevance of the explanations are determined by the properties of the decision-making environment, including the characteristics of the user, the objectives of the explanation mechanisms, the nature of the underlying processes, the credibility and the quality of the predictions generated by the adopted AI systems.

Therefore, in order to develop solutions that generate relevant explanations for public administration processes, it is important to identify and describe the elements of these various factors and examine their interdependencies.

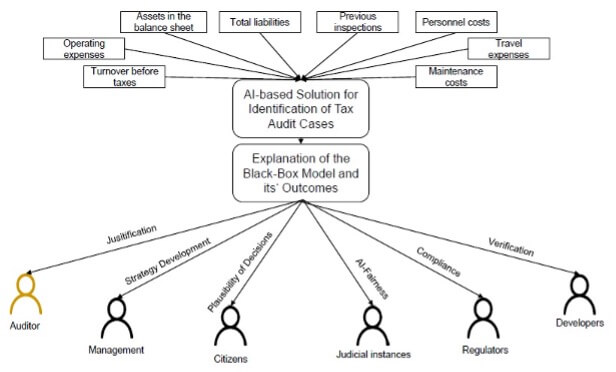

The previous reference proposes a conceptual model describing the main elements for an XAI solution in a use case of tax audit, which can be adapted for other uses in public administration.

In this model, the stakeholders would be auditors, managers, citizens, judicial authorities, regulatory authorities and AI developers. These could be further classified with other more detailed factors, such as AI expertise and experience with the subject. These users require explanations with different objectives, such as: verification of a trace of reasoning in the adopted AI methods; justification/ratification of the reliability in the results of the generated system; debugging of the underlying AI models to improve accuracy and computational efficiency; learning from the system, especially in the absence of domain knowledge; improving the effectiveness or efficiency to make good decisions quickly, etc.

After evaluating the characteristics of stakeholders’ processes, interdependencies and aspects related to decision making, the referenced study identified several scenarios for a use case in tax audit, which would demand solutions other than XAI. Figure 5 summarizes these scenarios.

Figure 5: XAI scenarios for tax audit (source: Mehdiyev, N., et al., 2021)

The various scenarios proposed are examples of the multiplicity of explanatory solutions that can exist in a single use case (in this case, tax audit), highlighting the diversity of the audience that requires explanation, their motivation/needs and other requirements.

It is important to note that AI explainability operates at two levels: global, which explains the model in general and its generic rules of operation; and local, which explains, for each individual data point, how the model reasoned and which rules led to a certain result.
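The difference between the two levels can be sketched with a simple linear score, where both views are easy to compute. The weights, the bias and the feature names below are hypothetical; they only serve to show that the global ranking of features need not match what drives one particular result.

```python
# Hypothetical linear risk score; weights and feature names are invented for illustration.
WEIGHTS = {"declared_income_gap": 2.0, "late_filings": 0.5, "years_registered": -0.1}
BIAS = 0.2

def score(record):
    return BIAS + sum(WEIGHTS[name] * record[name] for name in WEIGHTS)

def global_explanation():
    """Global view: features ranked by absolute weight, the same for every taxpayer."""
    return sorted(WEIGHTS, key=lambda name: abs(WEIGHTS[name]), reverse=True)

def local_explanation(record):
    """Local view: how much each feature added to this particular record's score."""
    return {name: WEIGHTS[name] * record[name] for name in WEIGHTS}

record = {"declared_income_gap": 0.1, "late_filings": 4, "years_registered": 10}
local = local_explanation(record)

print("global ranking:", global_explanation())
print("local contributions:", local)
print("score:", round(score(record), 3))
```

Globally, `declared_income_gap` carries the largest weight, but for this record the score is driven mostly by `late_filings`. An auditor reviewing a single case would usually need the local view, while a manager assessing the model as a whole would need the global one.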

There are many explainability techniques, chosen according to the need and/or feasibility of use. Some examples: SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), Permutation Importance and Partial Dependence Plots. Dallanoce (2022) describes the main available techniques at a level suitable for non-experts.
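To give a feel for the idea behind SHAP, the sketch below computes exact Shapley values for a tiny model by averaging each feature's marginal contribution over all feature orderings. This is a simplification under stated assumptions: "absent" features are replaced by a single fixed baseline, the model and its inputs are hypothetical, and the SHAP library itself uses background data and faster approximations rather than this brute-force enumeration.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    names = list(instance)
    contributions = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for ordering in orderings:
        current = dict(baseline)  # start with every feature at its baseline value
        previous = model(current)
        for name in ordering:
            current[name] = instance[name]  # switch one feature to its real value
            value = model(current)
            contributions[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in contributions.items()}

# Hypothetical model with an interaction between features "a" and "c".
def model(f):
    return 2.0 * f["a"] + 1.0 * f["b"] + 3.0 * f["a"] * f["c"]

instance = {"a": 1.0, "b": 1.0, "c": 1.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}

phi = shapley_values(model, instance, baseline)
print(phi)  # {'a': 3.5, 'b': 1.0, 'c': 1.5}
```

The contributions add up to the difference between the prediction for the instance (6.0) and the prediction for the baseline (0.0), and the interaction term is split evenly between `a` and `c`. Enumerating all orderings grows factorially with the number of features, which is why practical tools rely on approximations.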

AI algorithms in tax administration: is explainability always necessary?

The application of explainability to AI algorithms is especially linked to the relations between governments and citizens (G2C) and between governments and companies (G2B).

This is because providing explanations is mandatory at this level, whereas for decisions in the private sphere it is mandatory only when the law requires it. In both cases, lawyers and data scientists must work together [Kuzniacki, 2021].

When explainability is necessary, Kuzniacki also recommends that, when modeling AI based on tax legislation, human experts in the field be kept in the loop rather than relying entirely on the models, in order to mitigate risks arising from absent or deficient explainability.

Final comments

Although the importance of the topic is recognized, the approach that tax administrations should adopt with respect to the use of XAI remains an open question, motivating academic and institutional research. Along these lines, at an event held at the University of Amsterdam[4], Dr. Peter Fettke proposed four guidelines for the understanding and design of XAI for tax systems:

Guideline 1: Develop a tax model.

Guideline 2: Communicate explanations.

Guideline 3: Generate and evaluate explanations.

Guideline 4: Develop and evaluate a machine learning model.

XAI methods can contribute to accountability, fairness and transparency and help avoid discriminatory bias in public administration, as well as foster better acceptance of and trust in AI systems.

Research in the XAI area could also be integrated into the work of the CIAT Advanced Analytics Center[5], considering the context of Latin America and the Caribbean, in order to generate guidelines for the tax administrations of the region.

Thus, XAI is another matter to be considered in the universe of the digitalization of tax administrations and good fiscal governance.

Bibliography

Dallanoce, F. 2022. “Explainable AI: A Comprehensive Review of the Main Methods.” Medium, January 4. Available at: https://medium.com/mlearning-ai/explainable-ai-a-complete-summary-of-the-main-methods-a28f9ab132f7

Fettke, P. 2023. “Explainable Artificial Intelligence (XAI) Supporting Public Administration Processes. On the Potential of XAI in Tax Audit.” Conference at the University of Amsterdam / Amsterdam Centre for Tax Law. March

Kuzniacki, B. 2021. “Explainability in Artificial Intelligence & (Tax) Law”. International Law and Global South. June 3. Available at: https://internationallawandtheglobalsouth.com/guest-post-explainability-in-ai-and-international-law-with-specific-reference-to-tax-law/

Kuzniacki, B., Tylinski, K. 2020. “Legal Risks Stemming from the Integration of Artificial Intelligence (AI) to Tax law.” Kluwer International Tax Blog. Available at: kluwertaxblog.com/2020/09/04/legal-risks-stemming-from-the-integration-of-artificial-intelligence-ai-to-tax-law/

Linardatos, P., Papastefanopoulos, V., Kotsiantis, S. 2021. “Explainable AI: A Review of Machine Learning Interpretability Methods.” Entropy 23, 18. https://dx.doi.org/10.3390/e23010018

Loyola-González, O. 2019. “Black-Box vs. White-Box: Understanding Their Advantages and Weaknesses From a Practical Point of View” in IEEE Access, vol. 7, pp. 154096-154113, 2019, https://doi.org/10.1109/ACCESS.2019.2949286

Mehdiyev, N., Houy, C., Gutermuth, O., Mayer, L., Fettke, P. 2021. “Explainable Artificial Intelligence (XAI) Supporting Public Administration Processes – On the Potential of XAI in Tax Audit Processes.” In: Ahlemann, F., Schütte, R., Stieglitz, S. (eds) Innovation Through Information Systems. WI 2021. Lecture Notes in Information Systems and Organisation, vol 46. Springer, Cham. https://doi.org/10.1007/978-3-030-86790-4_28

Myrianthous, G. 2023. “Understanding The Accuracy-Interpretability Trade-Off”. Towards Data Science. Oct 2020. Available at: https://towardsdatascience.com/accuracy-interpretability-trade-off-8d055ed2e445

Ngo, H., Sakhaee, E. 2022. “AI Index Report 2022: Chapter 3 – Technical AI Ethics”. Stanford University

Seco, A. 2021. “Explainable Artificial Intelligence (XAI) and its Importance in Tax Administration”. CIAT Blog 01 November. See: https://www.ciat.org/inteligencia-artificial-explicable-xai-y-su-importancia-en-la-administracion-tributaria/

[2] See https://www.echr.coe.int/Documents/Convention_ENG.pdf

[3] Google Trends Popularity Index

[4] Conference: “Towards Explainable Artificial Intelligence (XAI) in Taxation: The Future of Good Tax Governance”, Amsterdam Centre for Tax Law, March 2023

[5] See: https://www.ciat.org/ciat-y-microsoft-colaboran-en-la-transformacion-y-modernizacion-de-las-administraciones-tributarias-de-america-latina/
